AI Governance Defined

AI governance is the set of roles, rules, processes, and technical controls that determine how your organization builds, buys, deploys, monitors, and retires AI systems.

Think of it like financial governance: it’s not just an audit. It’s budgeting, approvals, controls, monitoring, and accountability, applied to artificial intelligence.

If you’re using AI to make decisions, generate content, or automate work, you’re already doing AI governance. Just not intentionally.

Why “Just Paperwork” Fails

Many organizations treat AI governance as a compliance checkbox. That’s dangerously insufficient for three reasons:

AI risk is dynamic. Models degrade when inputs change. Vendors update their models without notice. Users discover new failure modes. A static policy can’t detect drift, but operational controls can.
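One way to turn “detect drift” into an operational control is to compare the distribution of a model’s inputs or scores in production against a baseline captured at launch. Here is a minimal sketch using the Population Stability Index (PSI), one widely used drift metric among several. The function names, the bin edges, and the 0.25 alert threshold (a commonly cited rule of thumb) are illustrative assumptions, not a standard.

```python
import bisect
import math
from typing import List, Sequence

# Assumption: 0.25 is a commonly cited rule-of-thumb cutoff for significant drift; tune per system.
DRIFT_ALERT_THRESHOLD = 0.25


def bin_proportions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values in each bin. `edges` are sorted interior cut points,
    giving len(edges) + 1 bins that together cover the whole real line."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(baseline: Sequence[float], current: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions (proportions)."""
    if len(baseline) != len(current):
        raise ValueError("baseline and current must use the same bins")
    total = 0.0
    for b, c in zip(baseline, current):
        # Floor empty bins at eps so the log term stays finite.
        b, c = max(b, eps), max(c, eps)
        total += (c - b) * math.log(c / b)
    return total


def has_drifted(baseline_values, current_values, edges) -> bool:
    """True if production data has shifted past the (hypothetical) alert threshold."""
    score = psi(bin_proportions(baseline_values, edges),
                bin_proportions(current_values, edges))
    return score > DRIFT_ALERT_THRESHOLD


if __name__ == "__main__":
    edges = [0.25, 0.5, 0.75]
    baseline_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]   # e.g. model scores at launch
    current_scores = [0.6, 0.7, 0.8, 0.8, 0.85, 0.9, 0.9, 0.95]  # e.g. model scores this week
    print(has_drifted(baseline_scores, current_scores, edges))   # True
```

A check like this only flags that the data has shifted; deciding whether the shift matters still needs a named owner and an escalation path.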

AI failures start small. A chatbot hallucinates slightly more. A hiring tool’s recommendations shift subtly. These look like UX bugs until they become discrimination claims, privacy incidents, or regulatory scrutiny.

Accountability is unclear by default. When something goes wrong, can you answer “who approved this?” Governance establishes ownership, decision rights, and escalation paths before you need them.

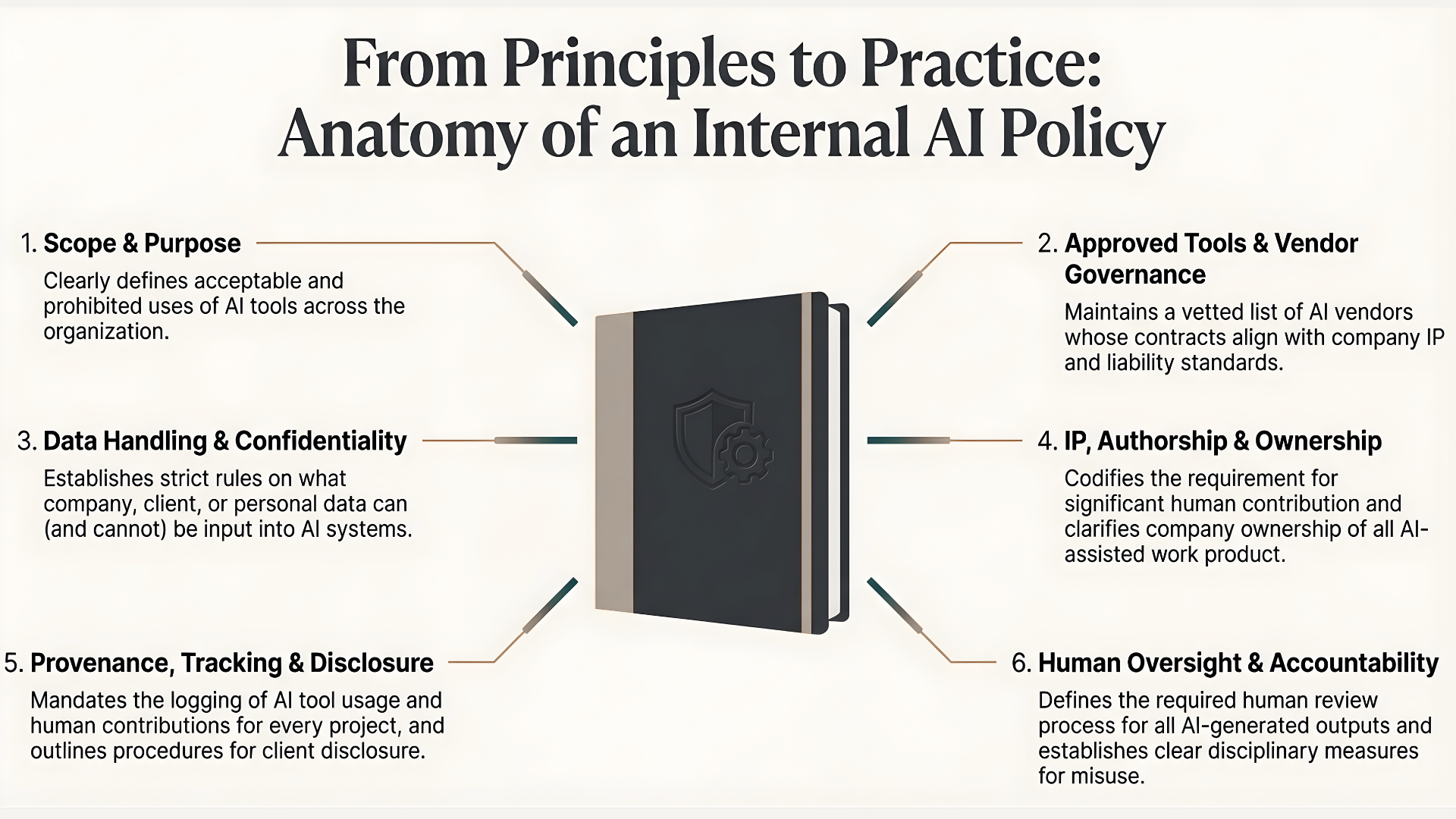

The Five Domains of AI Governance

Effective AI governance spans:

- Strategic governance: Which AI use cases are allowed? What risks will you accept?

- Organizational governance: Who owns each AI system? Who can launch, pause, or retire it?

- Technical governance: Data quality standards, model evaluation, security controls, testing protocols

- Operational governance: Production monitoring, drift detection, incident response, audit logs

- Compliance and ethics: Regulatory alignment, privacy, transparency, fairness

If your program is mostly legal review, you’re missing most of the surface area.

The Business Case for AI Governance

Beyond regulatory pressure, governance delivers tangible value:

- Prevents expensive rework: Building logging, controls, and documentation after deployment costs more than designing them in

- Protects brand reputation: AI mistakes are highly shareable; governance reduces headline risk

- Improves adoption: Reliable AI wins user trust; unreliable AI gets quietly ignored

- Strengthens vendor negotiations: Clear requirements give you leverage on logging, security, and incident terms

Common AI Risks Governance Prevents

- Hallucinations in high-stakes contexts (medical, legal, financial)

- Data leakage via prompts or logs

- Prompt injection attacks

- Shadow AI (employees pasting sensitive data into consumer tools)

- Automation bias (humans over-trusting AI outputs)

- Vendor drift (provider model updates that change behavior without warning)
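For data leakage and shadow AI, one technical control is to screen prompts before they leave your environment. A minimal sketch, assuming a simple regex-based redaction step sits in front of an external AI tool. The two patterns and the `redact_prompt` helper are hypothetical and far narrower than a real data loss prevention (DLP) tool would need to be.

```python
import re
from typing import List, Tuple

# Hypothetical patterns for illustration only; real DLP coverage must be much broader.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # 13 to 16 digits, optionally separated by spaces or hyphens (card-number-like).
    "CARD_NUMBER": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact_prompt(prompt: str) -> Tuple[str, List[str]]:
    """Replace sensitive matches with placeholders and report which types were found."""
    found = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            found.append(label)
    return prompt, found


if __name__ == "__main__":
    clean, found = redact_prompt(
        "Summarize the complaint from jane@example.com about card 4111 1111 1111 1111"
    )
    print(clean)  # Summarize the complaint from [EMAIL] about card [CARD_NUMBER]
    print(found)  # ['EMAIL', 'CARD_NUMBER']
```

The returned list of detected types can feed your audit logs, and for high-risk data you may prefer to block the prompt outright rather than redact it.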

Start Here: Three Immediate Actions

If you’re early in your AI governance journey:

- Create an AI inventory and assign owners. You can’t govern what you can’t list.

- Adopt risk tiers with minimum controls. Not every chatbot needs the same rigor as an underwriting model.

- Add monitoring and an incident playbook for anything customer-facing or high-impact.
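The first two actions can start as a simple, version-controlled inventory. A minimal sketch, assuming hypothetical tier names (LOW, MEDIUM, HIGH), hypothetical control names, and a rule that each tier inherits the controls of the tier below it; your own tiers and minimum controls will differ.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, FrozenSet, List, Optional, Set


class RiskTier(Enum):
    LOW = "low"        # e.g. internal drafting assistant
    MEDIUM = "medium"  # e.g. customer-facing chatbot
    HIGH = "high"      # e.g. underwriting or hiring model


# Hypothetical minimum controls; each tier includes everything the tier below requires.
_LOW = frozenset({"usage_policy"})
_MEDIUM = _LOW | {"audit_logging", "production_monitoring", "incident_playbook"}
_HIGH = _MEDIUM | {"pre_launch_evaluation", "human_review", "fairness_testing"}
MINIMUM_CONTROLS: Dict[RiskTier, FrozenSet[str]] = {
    RiskTier.LOW: _LOW,
    RiskTier.MEDIUM: _MEDIUM,
    RiskTier.HIGH: _HIGH,
}


@dataclass
class AISystem:
    name: str
    owner: Optional[str]  # accountable person or team; None means nobody owns it yet
    tier: RiskTier
    controls: Set[str] = field(default_factory=set)  # controls actually in place


def governance_gaps(system: AISystem) -> List[str]:
    """List what this system is missing before it meets its tier's minimum bar."""
    gaps = []
    if not system.owner:
        gaps.append("no owner assigned")
    missing = MINIMUM_CONTROLS[system.tier] - system.controls
    gaps.extend(f"missing control: {c}" for c in sorted(missing))
    return gaps


inventory = [
    AISystem("support-chatbot", owner="cx-platform-team", tier=RiskTier.MEDIUM,
             controls={"usage_policy", "audit_logging"}),
    AISystem("underwriting-model", owner=None, tier=RiskTier.HIGH),
]

if __name__ == "__main__":
    for system in inventory:
        print(system.name, governance_gaps(system))
```

Running a check like this whenever an entry is added or changed turns “assign owners” and “minimum controls” from policy statements into something you can verify.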

The Bottom Line

AI governance isn’t bureaucracy. It’s the discipline that turns AI from a chaotic experiment into a dependable capability.

If your company is deploying AI without real governance, you’re not moving fast. You’re moving blind.

Ready to Build Your AI Governance Framework?

Most companies we talk to know they need governance. They just don’t know where to start.

Start with a conversation. We’ll assess where you are, identify your highest-risk gaps, and outline what a comprehensive framework would look like for your specific situation.
